There are so many terms used for different kinds of software testing:

There’s a lot of overlap between some of these terms, and some terms, such as ‘Integration Testing’, mean different things in different contexts or to different people [1]. Without some kind of mental framework, it can also be difficult to understand how all these kinds of testing relate to each other and fit together. And without that understanding, how can we know for sure that we’ve covered every conceivable kind of software testing?

The aim of this post is to create a framework that can help with these things [2].

The framework

This framework is going to consist of a few different roughly independent ‘axes’ along which to classify software testing, with the aspiration that together they can completely characterise any given form of software testing, at least with respect to what part of a system it tests.

A couple of the terms in the word wall suggest axes directly, such as the distinction between Automated and Manual testing, and between Functional and Non-Functional testing.

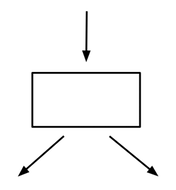

The rest of the axes require a little bit of abstraction before I can introduce them. That abstraction is the concept of a ‘service’ in its most abstract sense, as represented by its architecture diagram:

Here, the incoming arrow represents the interface of the service and the outgoing arrow(s) represent the service’s dependencies.

A service could be an HTTP API that may depend on other HTTP APIs and/or a database. Or it could be a UI that may depend on backend HTTP APIs. Or it could even represent a module of Code that may depend on other code modules and/or HTTP APIs and/or databases. Or it could represent a Database that interfaces via SQL but doesn’t have any dependencies. Or it could be a combination of the above that exposes a single interface, for example a UI connected to an HTTP API connected to a database. We can still always classify such a combination as one of Http, UI, Code or Database [3], depending on the type of interface it provides. In each case, the abstract properties of having a single interface and possibly having dependencies are the same.

Given an integrated system of services, there are essentially two types of testing you can do: you can test services individually as ‘Boxes’, and you can test the ‘Arrows’ between services. Testing a service individually as a ‘box’ is the most common form of testing, where you mock out all the dependencies and test all conceivable uses of the interface. It is implicitly what we usually mean when we talk about testing a service. This can include all ‘levels’ [4] of software testing, since a system can be partitioned into abstract services at varying degrees of coarseness. Testing an arrow, on the other hand, focuses specifically on the communication between two services, which includes things like contract testing [5].
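As a minimal sketch of box testing, the example below tests a small Code service with its single dependency mocked out. The names `GreetingService`, `greet` and `get_name` are hypothetical and exist only for illustration; the mock comes from Python’s standard `unittest.mock` module.

```python
from unittest.mock import Mock


class GreetingService:
    """Hypothetical 'box': its interface is greet(), its dependency is a user store."""

    def __init__(self, user_store):
        # The dependency is injected so that a test can replace it with a mock.
        self._user_store = user_store

    def greet(self, user_id: int) -> str:
        name = self._user_store.get_name(user_id)
        return f"Hello, {name}!" if name else "Hello, stranger!"


def test_greets_known_user():
    store = Mock()
    store.get_name.return_value = "Ada"  # the mocked dependency's response
    service = GreetingService(store)
    assert service.greet(1) == "Hello, Ada!"
    # Check that the box used its outgoing arrow as expected.
    store.get_name.assert_called_once_with(1)


def test_greets_unknown_user():
    store = Mock()
    store.get_name.return_value = None
    assert GreetingService(store).greet(2) == "Hello, stranger!"


test_greets_known_user()
test_greets_unknown_user()
```

Only the box is exercised here. Whether the real user store actually behaves the way the mock does is a question about the arrow, and this test says nothing about it.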

When testing a service – a box – there are further ways we can classify the testing based on the properties of the service.

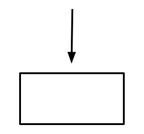

Firstly, a service may have no external dependencies as in the following diagram:

To differentiate, we could call this a ‘Leaf’ service and call a service with dependencies a ‘Branch’ service.

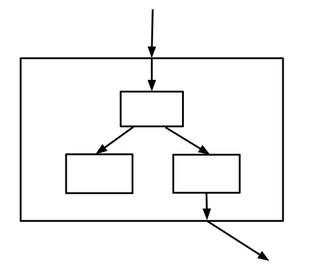

Secondly, it may be possible to break down a service into smaller component services, as illustrated in the following diagram:

Let’s call a service ‘Atomic’ if it cannot be broken down into smaller services, and ‘Compound’ if it can. More precisely, I think we should say a service is atomic if it cannot be broken down into component services that have the same type of interface as the service itself. So for example, while an Http service can be broken down into component Code services, I think we should still classify it as atomic as an Http service. (A compound Http service would be an Http service that is a wrapper for a system of integrating Http services.)

One final way to classify services is as either ‘Stateless’ or ‘Stateful’. The difference is that in a stateful service, it cannot be guaranteed that the same request yields the same response, because the response depends on the state as well as the request. In testing, this means that either the order of tests is important or the state has to be carefully initialised before each test.
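To illustrate, here is a small sketch of testing a stateful service. The `Counter` class and the `fresh_counter` helper are hypothetical; the point is only that each test builds its own initial state, so the tests pass in any order.

```python
class Counter:
    """Hypothetical stateful service: the response depends on earlier requests."""

    def __init__(self):
        self._count = 0

    def increment(self) -> int:
        self._count += 1
        return self._count


def fresh_counter() -> Counter:
    # Initialise the state explicitly before each test instead of sharing it.
    return Counter()


def test_first_increment_returns_one():
    assert fresh_counter().increment() == 1


def test_second_increment_returns_two():
    counter = fresh_counter()
    counter.increment()  # the same request...
    assert counter.increment() == 2  # ...yields a different response


test_second_increment_returns_two()
test_first_increment_returns_one()
```

If both tests shared a single `Counter` instance instead, their results would depend on the order in which they ran.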

Putting all these concepts together, I suggest the following set of axes for classifying software testing:

- Box vs Arrow

- Http vs Code vs UI vs Database

- Functional vs Non-Functional

- Automated vs Manual

and relevant to box testing only:

- Atomic vs Compound

- Branch vs Leaf

- Stateless vs Stateful
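One way to make these axes concrete is to encode them as a small data structure. The sketch below is only an illustration: the class and field names are my own assumptions, and `None` is used to mean ‘not specified’ on an axis. It also enforces the rule that the last three axes apply to box testing only.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Target(Enum):
    BOX = "Box"
    ARROW = "Arrow"


class Interface(Enum):
    HTTP = "Http"
    CODE = "Code"
    UI = "UI"
    DATABASE = "Database"


@dataclass(frozen=True)
class Classification:
    """Hypothetical record with one field per axis; None means 'not specified'."""

    target: Target
    interface: Optional[Interface] = None
    functional: Optional[bool] = None  # False = Non-Functional
    automated: Optional[bool] = None   # False = Manual
    atomic: Optional[bool] = None      # False = Compound (box only)
    branch: Optional[bool] = None      # False = Leaf (box only)
    stateful: Optional[bool] = None    # False = Stateless (box only)

    def __post_init__(self):
        box_only = (self.atomic, self.branch, self.stateful)
        if self.target is Target.ARROW and any(v is not None for v in box_only):
            raise ValueError(
                "Atomic/Compound, Branch/Leaf and Stateless/Stateful "
                "apply to box testing only"
            )


# Unit testing as classified later in this post:
# Automated Functional testing of an Atomic Code service.
UNIT_TESTING = Classification(
    target=Target.BOX,
    interface=Interface.CODE,
    functional=True,
    automated=True,
    atomic=True,
)
```

Leaving an axis as `None` mirrors how some of the classifications later in the post only constrain a few of the axes.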

The framework applied

Let’s see how a few of the most important kinds of testing in the word wall can be defined (or at least classified) in terms of this framework:

- Unit testing

  - Automated Functional testing of an Atomic Code service [6]

- Component testing

  - (1) Automated Functional testing of a Compound Code service.

  - (2) Automated Functional testing of an Atomic Http service.

- Integration testing

  - (1) Functional testing of a Compound service.

  - (2) Functional testing of an Arrow between services (usually by testing a Compound of the two services connected by the arrow).

- Interface testing

  - Functional testing of an Arrow between services.

- Contract Testing / Pact Testing

  - Functional testing of an Arrow from a service to an Http service.

- End-to-End Testing

  - Testing of a Compound Leaf UI service.

- Smoke Testing

  - Minimal Functional testing of a Compound Leaf service.

- User Acceptance Testing

  - Manual Functional testing of a UI service.

- User Journey Testing / Sequence Testing

  - Functional sequential testing of a Stateful UI or Http service.

- Load Testing

  - Non-Functional testing of an Http service.

- Usability Testing

  - Non-Functional testing of a UI service.

- Types as Tests

  - Automated Functional testing of Arrows between Code services.

Not all of the terms in the word wall can be defined or even classified in this framework; for example ‘Regression Testing’, ‘Monitoring and Alerting’ and ‘Happy Path Testing’ to name a few. What these terms seem to have in common is that they classify among other things the scope of the testing, whereas this framework concentrates on what part of the system is being tested, in the interest of simplicity.

One of the immediate advantages of a framework like this is that it can help to identify where there may be gaps in an existing test suite. For example, if you wonder about testing the Arrows between Code services, you’ll find that’s precisely the purpose of static types!
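As a rough sketch of static types acting as a test of an Arrow between two Code services, the example below uses Python type hints with `typing.Protocol`. The names `PriceLookup`, `Catalogue` and `basket_total` are hypothetical. Python does not enforce annotations at runtime, so the ‘test’ is assumed to be run by a separate static checker such as mypy.

```python
from typing import Protocol


class PriceLookup(Protocol):
    """The interface that the consuming service expects of its dependency."""

    def price_in_pence(self, sku: str) -> int: ...


class Catalogue:
    """Hypothetical providing service; it satisfies PriceLookup structurally."""

    def price_in_pence(self, sku: str) -> int:
        return {"apple": 30, "pear": 45}.get(sku, 0)


def basket_total(lookup: PriceLookup, skus: list[str]) -> int:
    # The annotation on `lookup` describes the arrow from this service to its
    # dependency. If Catalogue.price_in_pence were changed to return a str,
    # a static checker would report the call below without running any test.
    return sum(lookup.price_in_pence(sku) for sku in skus)


total = basket_total(Catalogue(), ["apple", "apple", "pear"])
```

Nothing here checks that the prices are right; that is box testing of `Catalogue`. The types only check that the two services agree on the shape of the communication between them.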

1. …as discussed in this article on integration testing, which distinguishes between ‘broad’ and ‘narrow’ integration testing. These two interpretations are also defined later in the post, after the framework is introduced.

2. There are already some helpful frameworks out there. I like Martin Fowler’s Software Testing Guide and particularly his summary of microservice testing strategies. There’s also the ‘Testing Pyramid’, which classifies software testing by the ‘level’ at which the service boundaries are defined, and also suggests an ideal proportion of tests at each level.

3. These are, I’m sure, not the only possible types of interface. For example, you could consider a Kafka event producer to be a service with a ‘Kafka’ interface which, if it’s event driven, may depend on other Kafka services and may also depend on HTTP APIs and/or databases. I’ve left out this example for simplicity.

4. These levels refer to the levels of the Testing Pyramid. Testing at different levels inevitably means there will be overlap between tests, as a service may be included in tests at multiple different levels, and as a result measuring test coverage can become a bit ambiguous. But tests at different levels are valuable and complement each other well: the lower level tests provide more coverage, and the higher level tests are more meaningful and more resilient to refactoring. The Testing Pyramid suggests an ideal balance between these levels of testing.

5. Quite often contract testing is implemented using a ‘test double’, aka ‘stub server’, that returns dummy data, and is therefore example based. Alternatively, you could have schema-based contract tests that can algebraically test complete compatibility of the consumer JSON schema with the provider JSON schema.

6. Sometimes the term ‘Unit Testing’ is used more broadly than this and can include code services that are not strictly atomic, as long as they are sufficiently small. In Martin Fowler’s description of unit tests, this is called ‘Sociable Unit Testing’, and strictly atomic unit testing is called ‘Solitary Unit Testing’.
